Today, we are going to talk about the impact of duplicate content on GEO from the perspective of ecommerce. If you operate under the assumption that the duplicate content issue has been permanently resolved by your SEO specialists, we have some bad news: The old problem returns. And in the age of AI, the stakes are higher than ever.

In this guide, we explain why traditional fixes like canonical tags fail in the era of Generative Engine Optimization, explore the mechanics of "Information Gain," reveal the "Thesaurus Trap" that fools ecommerce brands, and outline a strategic pivot to help your store secure its place in AI-generated answers. To learn more about similar GEO issues, follow this guide: 12 Common GEO Mistakes to Avoid in the AI Era.

Before going any further, let’s focus on the essential aspects. Duplicate content hurts GEO even more than traditional SEO. While search engines might tolerate some redundancy, AI models rely on clear, unique, and structured signals to decide which single brand to cite in an answer.

Here are the five critical impacts you need to know:

Now, let’s be more specific.

Before we dissect how AI engines treat the “good old” issue, we must acknowledge why duplicate content has been the silent killer of ecommerce performance for years.

In the traditional search model (Google Search, Bing), duplicate content is primarily (and still) a problem, an efficiency problem, to be more specific. Consider a search engine as a librarian. It has limited shelf space and limited time to read new books.

When an ecommerce store floods its site with thousands of near-identical pages to the librarian (our search engine), the librarian cannot cope with this volume efficiently. The situation results in three specific structural failures:

Every time Googlebot visits your site, it has a finite "allowance" of pages it can crawl.

When you have multiple pages targeting the same intent, you force Google to choose a winner.

This is the most common sin in ecommerce.

But here is the critical pivot: In traditional SEO, the worst-case scenario was that your "Blue T-Shirt" page wouldn't rank. You could fix these three structural failures with technical patches like noindex tags or rel="canonical". But these methods no longer work for GEO. Here is why.

rel="canonical" or Why SEO Fixes Don’t Work in GEO For over a decade, ecommerce SEOs have slept soundly thanks to a single line of code: rel="canonical".

It was the ultimate "Get Out of Jail Free" card. If you had five variations of a product page, or if you were syndicating content across multiple storefronts, the canonical tag told Google exactly which URL to prioritize. It was a technical patch for a technical problem.

AI-driven engines like Google’s AI Overviews, Perplexity, and ChatGPT don't just catalogue links and look for instructions on which one is the "master" copy. They function as synthesizers, ingesting vast amounts of data to construct a singular answer.

When these Large Language Models encounter your product page, they aren't looking for a tag that says, "Rank me!" They are looking for distinct value.

If your site relies on the same manufacturer boilerplate text as Amazon, Walmart, and multiple niche retailers, then we have some bad news for you. Although the canonical tag might stop Google from indexing the wrong URL, it won't convince an AI to cite you.

This brings us to the fundamental shift every ecommerce manager must grasp to survive the transition to AI Search.

In traditional SEO, duplicate content confuses the crawler.

When an LLM sees the same text string across dozens of domains, it treats that information as low-value noise. It struggles to assign attribution to any specific retailer. Consequently, rather than trying to figure out which store is the "original," the AI often ignores the echo chamber of similar pages entirely, choosing instead to cite a source that offers "Information Gain."

If your content is a duplicate, you aren't just fighting for a ranking position anymore; you are fighting against being invisible in the synthesis. And the bad news is that old hacks are no longer efficient.

To learn more about the difference between search and generative engine optimization, follow our guide to SEO vs. GEO.

In the world of LLMs and Generative AI, the definition of duplicate content has fundamentally changed.

Traditional search engines look for Literal Duplication: exact matches of text strings. If Site A and Site B have the same 500 words in the same order, a crawler flags them as duplicates.

Generative Engines (like the models powering ChatGPT or Google's AI Overviews) look for Semantic Duplication. They don’t just scan for matching keywords; they scan for matching meaning. This shift is dangerous for ecommerce brands because it significantly widens the net of what counts as redundant.

An AI model creates a vector embedding of your content — essentially a mathematical map of the concepts on your page.

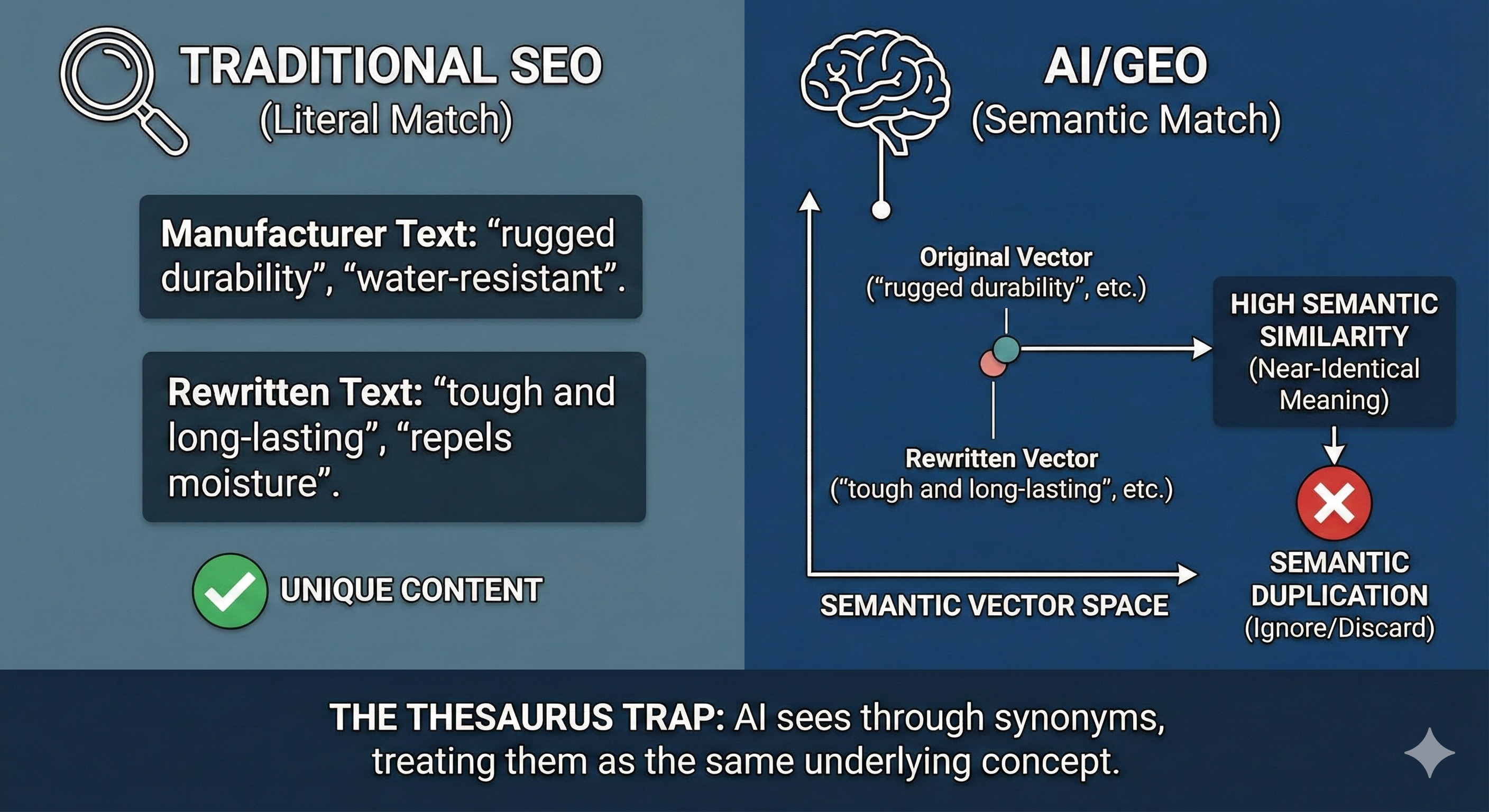

Consider the following scenario. You rewrite a manufacturer’s product description: “rugged durability” becomes “tough and long-lasting,” “water-resistant” becomes “repels moisture.” These tweaks are enough to heal the manufacturer-description plague in traditional SEO, but they are completely useless in GEO.

To a crawler, this looks like unique content.

To an LLM, the underlying vector embedding is almost identical.

Why?

Because you haven’t added new information — you’ve just used a thesaurus. The AI sees this as a semantic duplication and, therefore, unnecessary to cite.

The following visual demonstrates this "Thesaurus Trap," illustrating the stark difference between how a traditional crawler literally reads text versus how an AI semantically maps concepts:

Now, we need to revisit “Information Gain” to clarify what’s happening. This is the most critical metric in GEO because AI models prioritize content based on how much new knowledge it contributes to the existing dataset.

If your "Information Gain" score is low, the AI essentially treats your content as a "read-only" backup of the primary source (usually Amazon or the brand itself). It has no incentive to reference you.

But what makes AI engines so ruthless about ignoring duplicates?

AI models view content through the lens of cost and value. Unfortunately, the standard architecture of most ecommerce sites is inadvertently designed to fail this specific test.

While the original problem was about getting Google to find your pages, the GEO perspective is about getting AI to respect them. Ecommerce sites are notoriously prone to low "Information Gain," and here is how three specific industry standards are sabotaging your visibility in AI snapshots.

Here we go again. In GEO, the manufacturer-description plague is even more dangerous than in SEO. When an AI engine scans the web for "features of the Sony WH-1000XM5" and finds the same paragraph across 500 different retailer sites, the following things happen:

Because the content was ubiquitous, it belonged to everyone and no one. The AI treats the text as general knowledge rather than proprietary insight.

If it does decide to drop a citation link, it will default to the highest authority source (usually the manufacturer or Amazon), leaving the mid-sized retailer who used the same text completely shut out of the attribution loop.

We previously discussed how variants waste crawl budget. In GEO, they waste something far more valuable: Tokens.

Every AI model reads content in chunks called Tokens. Consequently, every model has a Context Window — a hard limit on the number of tokens it can process at once to generate an answer.

You may be surprised, but processing these tokens is not free. It becomes quite costly, especially when the model is forced to digest vast amounts of low-value noise.

If your content is repetitive, you are forcing the AI to "spend" its limited token budget on redundancy rather than value. To save resources, the model simply discards the expensive duplicates. And the same reasoning works on both macro and micro levels.

Let’s suppose an AI is answering a user query about "Best Hiking Boots."

The Verdict: You aren't just being filtered out because you broke a rule. In GEO, you are being filtered out because your content is computationally inefficient to process. But what’s wrong with ecommerce product variants?

On a micro level, the problem is built into the very structure of most ecommerce sites. Modern themes often handle variants (size, color, material) by loading massive amounts of code or repetitive text blocks into the DOM.

Thus, by flooding the Context Window with technical variants, you crowd out your value.

Many ecommerce brands operate a Direct-to-Consumer (D2C) site while simultaneously selling on Amazon, Walmart Marketplace, or eBay. This multichannel approach is great unless you try to save time by syndicating the same titles, bullets, and descriptions to all platforms, even if they are unique to your brand and deliver a high "Information Gain" score.

From the GEO perspective, this is a strategic suicide.

When an AI evaluates two pages with identical semantic meaning — your D2C site vs. your Amazon listing — it doesn't flip a coin. It applies a Source Authority Bias.

How does it work in our scenario? Let’s see:

If the content is a duplicate, the AI will almost invariably cite Amazon as the source of the answer.

By syndicating your content without differentiation, you are effectively training the AI to send your potential traffic to a third-party marketplace where you pay commission fees, rather than to your own high-margin storefront.

Learn more about why generative engine optimization is important: The End of the "Messy Middle": Why GEO is Important in the Future of Digital Visibility.

If canonicalization (the use of rel="canonical") was the shield of the SEO era, differentiation is the sword of the GEO era.

To win the game of generative search optimization for ecommerce, you must stop asking, "How do I tell Google this is the original page?" and start asking, "How do I convince an AI that this page knows something no one else does?"

The goal is to maximize your "Information Gain" score. You do this by layering proprietary data on top of the commoditized manufacturer baseline. Here are the three pillars of mitigating the duplicate content issue impact on your GEO visibility.

Generic retailers stop at the spec sheet provided by the supplier (Dimensions: 10x10, Weight: 2lbs). AI models already have this data memorized. To trigger a citation, you must provide structured data that the AI finds "novel." In other words, set the "Information Gain" knob to 10:

isSimilarTo or isRelatedTo properties to help the AI map your product within the wider market context.Although AI models can summarize facts, they cannot experience the physical world. Instead, they rely on human inputs to describe sensation and usage. This is your greatest competitive advantage against the biggest market players. Here is how you can demonstrate E-E-A-T — Experience, Expertise, Authority, and Trust — to both users and AI in a better way:

User-Generated Content (UGC) is the antidote to the "Thesaurus Trap." Real users don't worry about keywords; they write in the exact natural language patterns that other users type into search prompts.

The Bottom Line: In GEO, you cannot outrank the manufacturer by saying the same thing. You win by saying the thing the manufacturer can't say — how the product actually performs in the hands of a human.

Ready to master the full scope of tactics for the AI search era? Move beyond duplicate content and discover the complete playbook in our Ultimate GEO Strategy Guide.

We’re entering a turning point in digital commerce. For the past fifteen years, SEO revolved around technical correctness — making sure Google could crawl, index, and sort your pages. In that world, duplicate content was mostly an efficiency issue, and the canonical tag was good enough to keep things under control. But GEO changes the game entirely.

In a generative-first landscape, content becomes data for synthesis. AI models don’t care about crawl budgets or canonical instructions; they operate within strict token limits and context windows. In that environment, redundancy is costly, and recycled content is treated as noise rather than value.

If your strategy is built on being another echo in the chamber — repeating manufacturer descriptions and stock specs already published across hundreds of sites — you’re not just risking a lower ranking. You’re stepping out of the answer altogether.

The path forward isn't about producing more content; it's about producing different content. The brands that surface in generative answers will be the ones that invest in differentiation — layering in proprietary tests, real human experiences, and enriched attributes that increase "Information Gain". Escaping the echo chamber requires a total rethink of how you create value — learn the basics in our guide on how to get started with GEO.

In the age of AI search, uniqueness is no longer a competitive advantage. It’s the entry fee.

Our blog offers valuable information on financial management, industry trends, and how to make the most of our platform.