In 2026, the way we access information has fundamentally shifted. Answer engines have largely replaced the traditional list of links, moving the burden of synthesis from the human to the machine. You no longer need to analyze dozens of articles to extract what you need. AI promises to deliver the answer directly — clean, fast, and confident.

At least, that is the promise.

While the “messy middle” of searching has largely disappeared, it has been replaced by a new risk: the illusion of certainty. In this article, we explain why AI-generated answers should not be blindly trusted in 2026, nor in the years ahead. You will learn why AI produces incorrect answers, how to evaluate their quality, and how to use answer engines in a way that minimizes hallucinations, bias, and low-quality responses. But before we go further, let’s establish the basics.

To understand the limitations, we first need clear definitions.

Are answer engines and large language models (LLMs) the same thing? No, but they are closely related.

An LLM is the engine.

An answer engine is the whole machine: it includes a brain, memory, retrieval, rules, and an interface.

Your machine won’t move without an engine. But an engine alone is not a machine that can move.

The popularity of AI-generated answers is easy to explain: they dramatically simplify the user experience.

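In traditional search, you must scan a list of links, open and read multiple articles, compare them, and extract what you need yourself.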

Everything that happens between crafting a query and getting an answer is the “messy middle.” It takes time, attention, and critical thinking.

Answer engines remove that burden. You do not even need a well-phrased query: AI handles vague, poorly structured input remarkably well. It scans vast amounts of information and returns a single, confident response within seconds.

As a result, AI-generated answers feel:

But “good enough” is precisely where the problem begins.

Instead of being a detective, you are now an audience member. The machine does the heavy lifting, synthesizes the data, and presents a tidy answer. At least, that’s the sales pitch. But convenience often comes at the cost of the truth. Here is why answer engine responses are not always correct:

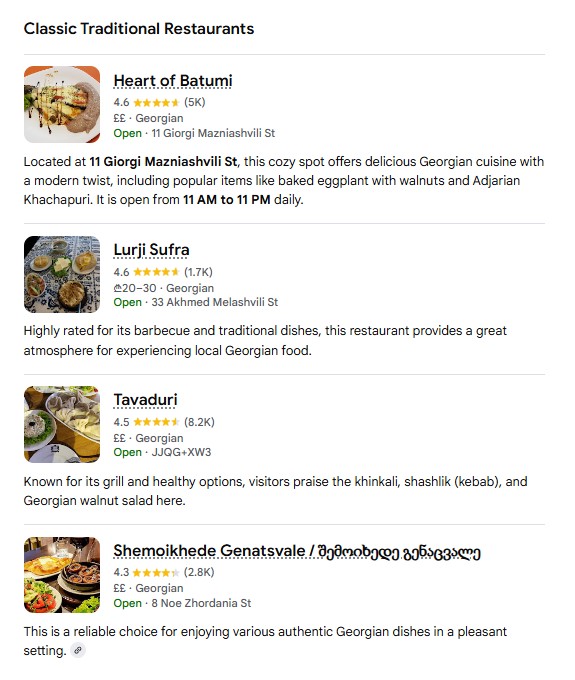

Imagine you are traveling in Georgia and want an authentic local dinner. You ask an answer engine for recommendations.

What do you get?

Highly rated restaurants. Plenty of reviews. Strong online presence.

Not bad — but incomplete.

What you won’t get are the small, underground places locals love. Some have no website, no Instagram, and no SEO. They survive on word of mouth. They are invisible to AI.

The same logic applies beyond travel.

Many high-quality sources sit behind logins, live in PDF manuals, or are simply not optimized for retrieval.

Imagine you are a specialized engineer looking for the chemical resistance of a specific 2026 polymer. The best data exists in a PDF manual buried on a manufacturer's password-protected portal. Because the AI can't "see" it, it might provide a generic answer based on older, similar materials, leading to a potentially dangerous technical error.

AI cannot cite what it cannot see.

And it cannot prioritize what is not optimized for retrieval.

When information is missing, models do not say “I don’t know.” They improvise.

Ask an answer engine for “10 SEO agencies in Esslingen, Germany,” and you will receive a list of:

In the worst case, the answer may also include borderline fabricated entities. This is not malicious; it is structural.

The model is optimized to complete the task, not to enforce strict factual boundaries. It would rather stretch definitions than return an incomplete list.

In extreme cases, models invent entities entirely to satisfy numeric or structural constraints.

AI models learn patterns early — and those patterns harden over time.

If an early, authoritative source introduces a flawed concept, it can become a foundational truth inside the model. That is the early-adopter advantage: correcting the way AI treats this information later becomes much harder.

This is often described as wet cement: while it is still wet, early sources can shape the model’s understanding, but once it dries, changing the model’s internal preferences becomes difficult, even with better data.

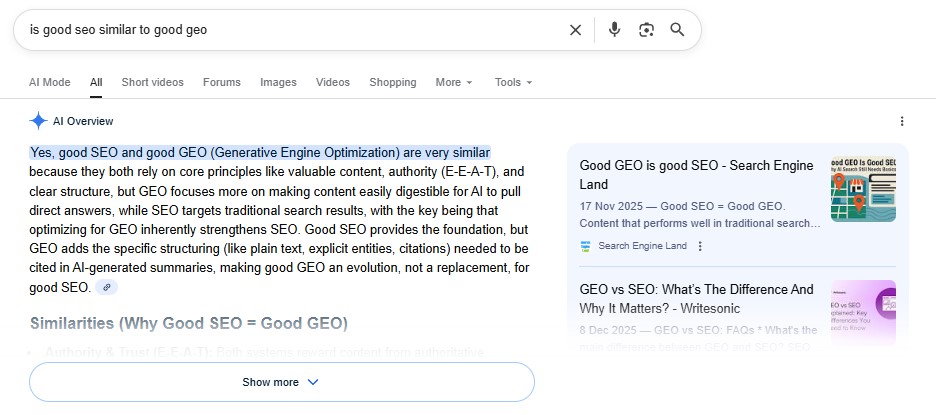

For example, ask whether “good SEO is the same as good GEO,” and many systems still confirm this claim, even though SEO and GEO are fundamentally different disciplines with different goals, methods, and success metrics. You can read more about that here: Why "Good SEO is Good GEO" is a Dangerous Myth.

This leads to edge-case blindness. While AI handles common scenarios well, it struggles when:

Answer engines rely on retrieved sources — but they cannot reliably judge their quality.

If multiple articles cite the same flawed research, AI interprets repetition as validation. Thus, errors propagate through citation loops.

Consider a study that is widely referenced simply because citing it is good for SEO, even though it has methodological flaws. AI sees consensus, not critique, and amplifies the error.

Context loss makes this worse.

A sentence pulled from a report about average behavior becomes a definitive claim about all cases. Nuance disappears. Probability turns into certainty.

The result: answers generated by likelihood, not verified truth.

In a world driven by answer engines, a response without a citation is essentially a "trust me" from a black box. While early 2024 models often hallucinated URLs, the problem in 2026 is more subtle: Implicit Knowledge vs. Explicit Sourcing.

Often, an AI will present a conclusion as "common knowledge" when it is actually a specific theory or a disputed claim. That is why traceability is non-negotiable.

AI does not have a moral compass; it has a statistical one. It reflects the world not as it is, but as it was described in the data it swallowed. As a result, you have to deal with four pillars of AI bias:

In 2026, with the internet full of AI-generated content, we are witnessing the "Habsburg AI" effect: a feedback loop in which LLMs are increasingly trained on text written by answer engines.

As a result, models absorb not only inaccuracies, bias, and false facts, but also a deeper structural flaw known as simplification hardening.

Because AI systems favor the most statistically probable next word, they gradually avoid rare metaphors, complex sentence structures, and edge-case ideas. Over time, this makes their language smoother and more predictable, but also less precise, less creative, and less capable of expressing nuance.

If you ask an AI to write a legal brief, it tends to use the most common boilerplate language. Over time, as more lawyers use AI, legal writing risks becoming a sea of identical, uninspired text. The "smoothness" of the output masks a loss of creative and intellectual depth.

The result? Answers become more polished and "pleasant" to read, but they lose the jagged edges of truth and the spark of human insight. They become mathematically average.

AI-generated answers are like fast-food delivery: you don’t need to shop for ingredients or spend time cooking. You get something quickly and with minimal effort — but you pay for that convenience with your health.

General search, by contrast, is like cooking at home. It gives you the ingredients, but the final result depends on your choices. If you select quality sources, understand proportions, and take time to prepare the meal, you end up with something healthier and more satisfying. The cost here is time and attention.

Can you survive on fast food alone? Technically, yes — but the long-term consequences are obvious. That is why your primary meals should be home-cooked. Especially when the meal matters, you need to see the ingredients yourself to know whether it is safe to consume.

When you use a general search, you aren't just reading text; you are evaluating an author. You can see if a medical article was written by a Mayo Clinic cardiologist or a freelance ghostwriter for a supplement brand. Answer engines often blend these voices into a single, sterile tone, stripping away the credentials that give information its weight.

AI seeks consensus, but the truth is often found in disagreement. If you search for "The impact of a new 2026 tax law," an AI might give you a balanced summary. However, a general search allows you to read a critique from an economist and a defense from a government official side by side. Seeing conflicting viewpoints is essential for developing a 360-degree understanding of a topic.

Information has a "half-life." During breaking news events, such as a stock market flash crash, a natural disaster, or a sudden political shift, answer engines can lag. They need time to "digest" and synthesize the data. General search indexes the live web continuously, showing you the raw footage and primary reports before the AI has had a chance to smooth over the details.

The most valuable information often lives in the "un-optimizable" corners of the web:

Sometimes, you don't know exactly what you’re looking for until you see it. This is "serendipity."

In a general search, every claim has a date, a publisher, and a reputation attached to it. If a journalist gets a story wrong, they issue a correction. If an AI gets a story wrong, it simply generates a different version next time. General search provides an audit trail that allows you to hold information providers accountable.

Perhaps the greatest risk of relying solely on AI is intellectual atrophy. When the machine does the synthesizing, your "analytical muscles" — the ability to spot a logical fallacy, to sense a biased tone, or to cross-reference a suspicious stat — begin to wither.

Synthesizing information yourself is an exercise in judgment. It forces you to ask: Why is this person telling me this? What are they leaving out? These are human skills that no algorithm can replicate.

A recent study on the consequences of LLM-assisted essay writing suggests that frequent reliance on generative AI engines is negatively correlated with critical thinking and analytical ability. The primary driver is cognitive offloading — when users delegate tasks such as summarization, brainstorming, or decision-making to AI, reducing the need for active, independent thought. Over time, this dependence can weaken cognitive resilience, diminish problem-solving skills, and erode the mental habits required for deep analysis.

So what should you do in this situation? The answer is simple:

AI is excellent for orientation and acceleration, but verification still requires human judgment and original sources. Finding and maintaining the right balance is not optional; it is the only sustainable approach. And within this approach, one habit is essential: evaluating the quality of what answer engines produce.

Think of an AI answer as testimony in court: it may be persuasive, but it is not "the truth" until it has been cross-examined. Use this checklist to separate fact from statistical fiction.

A modern answer engine should never just tell you something; it should show you where it found it.

As we’ve already mentioned, the state of being "correct" has a half-life in a rapidly changing world.

Identify the most important sentence in the AI’s response — the "anchor" upon which the rest of the answer depends.

Ironically, the more confident an AI sounds, the more you should doubt it.

To see if the AI truly "understands" the context or is just repeating a pattern, throw a wrench into the works.

In 2026, Answer Engine Optimization (AEO) is a rapidly growing industry that aims to make brands and their products appear as the cited answer inside AI-generated responses. In that narrow sense, the phrase “Good SEO is Good GEO” does hold: just as with search rankings, companies invest heavily to make sure you see their products and brands, so a cited answer may reflect optimization effort rather than quality alone.

We aren't suggesting that you should abandon AI-generated answers. Far from it! In 2026, avoiding AI is like avoiding the calculator in a math class — it’s an unnecessary handicap. However, the danger lies in using these tools blindly. Trusting an answer engine as your primary source of truth is a risk that few professionals can afford to take.

The reality of the modern information landscape is a balance of priorities:

The smartest users in 2026 don't choose one over the other; they understand the strengths of both. They use AI to navigate the vast ocean of data quickly, but they return to traditional search when they need to drop an anchor.

As we navigate 2026 and beyond, the most valuable skill in your professional toolkit isn’t prompt engineering — it’s judgment. Answer engines are magnificent assistants for drafting, summarizing, and brainstorming. But the final "seal of approval" must always be human.

By maintaining your habit of general search and applying a skeptical eye to every AI-generated summary, you ensure that you remain the master of the machine, rather than its most gullible user.

If AI is the new lens through which the world sees information, you cannot afford to be invisible to that lens. This is where Generative Engine Optimization (GEO) becomes vital. You must ensure your brand is not just indexed, but cited and trusted by the models that users rely on.

We offer the first AI-native control plane for ecommerce: a solution that doesn't just automate GEO but optimizes your entire workflow, ensuring your brand stays ahead of competitors and reacts quickly to both internal and external changes.
